42 soft labels deep learning

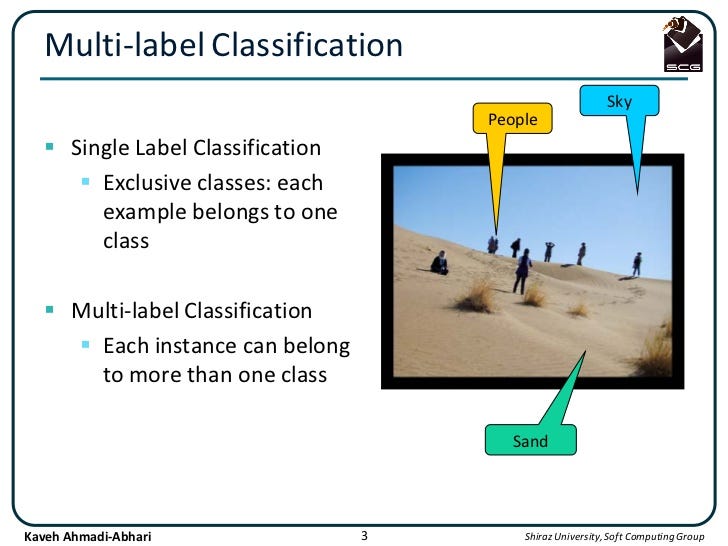

Learning Soft Labels via Meta Learning One-hot labels do not represent soft decision boundaries among concepts, and hence, models trained on them are prone to overfitting. Using soft labels as targets provides regularization, but different soft labels might be optimal at different stages of optimization.

What is the definition of "soft label" and "hard label"? A soft label is one which has a score (probability or likelihood) attached to it. The element is then a member of the class in question with a probability/likelihood score of, e.g., 0.7. This implies that an element can be a member of multiple classes (presumably with different membership scores), which is usually not possible with hard labels.
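To make the hard/soft distinction concrete, here is a minimal NumPy sketch. The three-class setup, the label values, and the helper name `cross_entropy` are illustrative assumptions, not taken from any of the sources quoted above.

```python
import numpy as np

# Hard label: the sample belongs to class 2 only (one-hot vector).
hard_label = np.array([0.0, 0.0, 1.0])

# Soft label: membership scores spread over several classes.
# The values are made up for illustration and sum to 1.
soft_label = np.array([0.1, 0.2, 0.7])


def cross_entropy(target, predicted, eps=1e-12):
    """Cross entropy between a target distribution and a prediction."""
    return float(-np.sum(target * np.log(predicted + eps)))


# A hypothetical model output for this sample.
predicted = np.array([0.2, 0.2, 0.6])

hard_loss = cross_entropy(hard_label, predicted)
soft_loss = cross_entropy(soft_label, predicted)
print(f"hard-label loss: {hard_loss:.4f}")
print(f"soft-label loss: {soft_loss:.4f}")
```

With a hard label only the probability of the single true class enters the loss; with a soft label every class with a non-zero score contributes.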

What is Label Smoothing? - Towards Data Science Label smoothing is used when the loss function is cross entropy, and the model applies the softmax function to the penultimate layer's logit vector z to compute its output probabilities p. In this setting, the gradient of the cross entropy loss with respect to the logits is simply ∇CE = p - y = softmax(z) - y, where y is the target label vector.
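The identity ∇CE = softmax(z) - y can be checked numerically. The sketch below compares the analytic gradient with a central finite-difference estimate; the logits, the target vector, and all function names are assumptions chosen for illustration. The identity relies on the target y summing to 1, which holds for both one-hot and smoothed labels.

```python
import numpy as np


def softmax(z):
    """Numerically stable softmax over a 1-D logit vector."""
    shifted = z - np.max(z)
    exps = np.exp(shifted)
    return exps / exps.sum()


def cross_entropy_from_logits(z, y):
    """Cross entropy between target y and softmax(z)."""
    return float(-np.sum(y * np.log(softmax(z))))


def analytic_grad(z, y):
    """Gradient of the loss with respect to the logits: p - y."""
    return softmax(z) - y


def numeric_grad(z, y, h=1e-6):
    """Central finite-difference estimate of the same gradient."""
    grad = np.zeros_like(z)
    for i in range(z.size):
        step = np.zeros_like(z)
        step[i] = h
        plus = cross_entropy_from_logits(z + step, y)
        minus = cross_entropy_from_logits(z - step, y)
        grad[i] = (plus - minus) / (2.0 * h)
    return grad


z = np.array([2.0, -1.0, 0.5])    # example logits
y = np.array([0.9, 0.05, 0.05])   # example smoothed target, sums to 1

print(analytic_grad(z, y))
print(numeric_grad(z, y))
```

The two printed vectors should agree to several decimal places.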

Soft labels deep learning

Meta Soft Label Generation for Noisy Labels - arxiv-vanity.com The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion. Our approach adapts the meta-learning paradigm to estimate optimal ...

Softmax Classifiers Explained - PyImageSearch

MetaLabelNet: Learning to Generate Soft-Labels from Noisy-Labels - DeepAI Soft-labels are generated from extracted features of data instances, and the mapping function is learned by a single layer perceptron (SLP) network, which is called MetaLabelNet. The base classifier is then trained using these generated soft-labels. These iterations are repeated for each batch of training data.

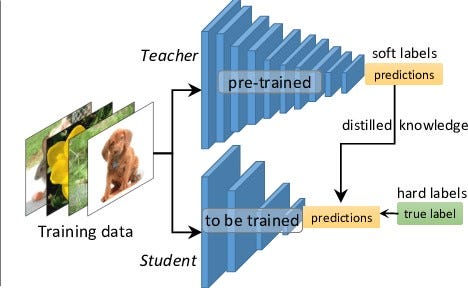

[2007.05836] Meta Soft Label Generation for Noisy Labels The existence of noisy labels in the dataset causes significant performance degradation for deep neural networks (DNNs). To address this problem, we propose a Meta Soft Label Generation algorithm called MSLG, which can jointly generate soft labels using meta-learning techniques and learn DNN parameters in an end-to-end fashion.

Knowledge distillation in deep learning and its applications - PMC Soft labels refer to the output of the teacher model. In the case of classification tasks, the soft labels represent the probability distribution among the classes for an input sample. The second category, on the other hand, considers works that distill knowledge from other parts of the teacher model, optionally including the soft labels.

Label Smoothing: An ingredient of higher model accuracy Your labels would be 0 for cat and 1 for not cat. Now, say you set label_smoothing = 0.2. Applying label smoothing, we get: new_onehot_labels = [0 1] * (1 - 0.2) + 0.2 / 2 = [0 1] * 0.8 + 0.1, so new_onehot_labels = [0.1 0.9]. These are soft labels, instead of hard labels, that is, 0 and 1.

Learning from Noisy Labels with Deep Neural Networks: A Survey Hwanjun Song, Minseok Kim, Dongmin Park, Jae-Gil Lee. Abstract: Deep learning has achieved remarkable success in numerous domains with...
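The label smoothing arithmetic in the cat / not-cat example can be reproduced in a few lines. This is a minimal sketch: the function name `smooth_labels` is hypothetical, and the formula used is the standard one, onehot * (1 - label_smoothing) + label_smoothing / num_classes, which matches the expression quoted in the snippet.

```python
import numpy as np


def smooth_labels(onehot, label_smoothing):
    """Blend a one-hot vector with the uniform distribution over classes."""
    onehot = np.asarray(onehot, dtype=float)
    num_classes = onehot.shape[-1]
    return onehot * (1.0 - label_smoothing) + label_smoothing / num_classes


# Two classes, hard label [0 1], label_smoothing = 0.2.
smoothed = smooth_labels([0, 1], 0.2)
print(smoothed)  # [0.1 0.9]
```

The smoothed vector still sums to 1, so it remains a valid target distribution for cross entropy.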

[1910.02551] Soft-Label Dataset Distillation and Text ... - arXiv.org Using 'soft' labels also enables distilled datasets to consist of fewer samples than there are classes, as each sample can encode information for multiple classes. For example, training a LeNet model with 10 distilled images (one per class) results in over 96% accuracy on MNIST, and almost 92% accuracy when trained on just 5 distilled images.

Data Labeling Software: Best Tools for Data Labeling - Neptune Playment is a multi-featured data labeling platform that offers customized and secure workflows to build high-quality training datasets with ML-assisted tools and sophisticated project management software. It offers annotations for various use cases, such as image annotation, video annotation, and sensor fusion annotation.

subeeshvasu/Awesome-Learning-with-Label-Noise - GitHub 2019-ICML - Combating Label Noise in Deep Learning Using Abstention. 2019-ICML - SELFIE: Refurbishing unclean samples for robust deep learning. 2019-ICASSP - Learning Sound Event Classifiers from Web Audio with Noisy Labels. ... 2020-ICPR - Meta Soft Label Generation for Noisy Labels. 2020-IJCV ...

Understanding Deep Learning on Controlled Noisy Labels In "Beyond Synthetic Noise: Deep Learning on Controlled Noisy Labels", published at ICML 2020, we make three contributions towards better understanding deep learning on non-synthetic noisy labels. First, we establish the first controlled dataset and benchmark of realistic, real-world label noise sourced from the web (i.e., web label noise ...

Labelling Images - 15 Best Annotation Tools in 2022 Prodigy is a highly efficient and scriptable data annotation tool, which is very easy to use and can train an AI model in only a few hours. It offers faster data collection and a more independent approach, and is known to have a higher level of successful projects than other tools.

PDF Unsupervised Person Re-Identification by Soft Multilabel Learning To overcome this problem, we propose a deep model for soft multilabel learning for unsupervised RE-ID. The idea is to learn a soft multilabel (real-valued label likelihood vector) for each unlabeled person by comparing the unlabeled person with a set of known reference persons from an auxiliary domain.

Unsupervised deep hashing through learning soft pseudo label for remote ... We design a deep auto-encoder network SPLNet, which can automatically learn soft pseudo-labels and generate a local semantic similarity matrix. The soft pseudo-labels represent the global similarity between inter-cluster RS images, and the local semantic similarity matrix describes the local proximity between intra-cluster RS images.

Validation of Soft Labels in Developing Deep Learning Algorithms for Detecting Lesions of Myopic Maculopathy From Optical Coherence Tomographic Images The predicted possibilities from the models trained by soft labels were close to the results made by myopia specialists.

Loss and Loss Functions for Training Deep Learning Neural Networks Almost universally, deep learning neural networks are trained under the framework of maximum likelihood using cross-entropy as the loss function. Most modern neural networks are trained using maximum likelihood. This means that the cost function is […] described as the cross-entropy between the training data and the model distribution.

Label smoothing with Keras, TensorFlow, and Deep Learning This type of label assignment is called soft label assignment. Unlike hard label assignment, where class labels are binary (i.e., positive for one class and negative for all other classes), soft label assignment allows the positive class to have the largest probability, while all other classes have a very small probability.

Deep Learning from Noisy Image Labels with Quality Embedding Specially, it consists of two important layers: (1) the contrastive layer estimates the quality variable in the embedding space to reduce noise effect; (2) the additive layer aggregates prior predictions and noisy labels as posterior to train the classifier.

How to make use of "soft" labels in binary classification - Quora LDA or latent dirichlet allocation is a generative statistical model that allows sets of observations to be explained by unobserved groups that explain why some parts of the data are similar. It is basically used to extract words which describe a document. These topics can be used as a feature model for your binary classification.

Google AI Blog: Deep Learning with Label Differential Privacy In the standard supervised learning setting, a model is trained to make a prediction of the label for each input given a training set of example pairs {[input 1, label 1], …, [input n, label n]}. In the case of deep learning, previous work introduced a DP training framework, DP-SGD, that was integrated into TensorFlow and PyTorch.

How To Label Data For Semantic Segmentation Deep Learning Models? Labeling the data for computer vision is challenging, as there are multiple types of techniques used to train the algorithms that can learn from data sets and predict the results. Image annotation...

(PDF) Deep learning with noisy labels: Exploring techniques and ... In this paper, we first review the state-of-the-art in handling label noise in deep learning. Then, we review studies that have dealt with label noise in deep learning for medical image analysis....

The structure of (a) traditional knowledge distillation and (b) feature... | Download Scientific ...

Label-Free Quantification You Can Count On: A Deep Learning ... - Olympus Although it shows excellent correspondence between the two methods, the total number of objects detected with deep learning was around 3% higher. Figure 2: Nuclei detected using fluorescence (left), the corresponding brightfield image (middle), and object shape predicted by deep learning technology (right).

Robust Training of Deep Neural Networks with Noisy Labels by Graph ... The averaged labels are soft-labels and capture the degree of confidence that each sample belongs to a certain class. Loss Function. The proposed method trains the DNN model with the following loss function constructed from three terms:

List of Deep Learning Layers - MATLAB & Simulink - MathWorks crop2dLayer. A 2-D crop layer applies 2-D cropping to the input. crop3dLayer. A 3-D crop layer crops a 3-D volume to the size of the input feature map. scalingLayer (Reinforcement Learning Toolbox) A scaling layer linearly scales and biases an input array U, giving an output Y = Scale.*U + Bias.

Deep Learning Series - Session 2: Automated and Iterative Labeling for Images and Signals Video ...
